title: iOS Audio Programming: Voice Changing

date: 2016-06-09

tags: Audio Unit, voice changing


iOS Audio Programming: Voice Changing

Requirement: record from the earphone mic in real time, apply a voice-changing effect, and play the result back in real time.

Initialization

The program samples the raw audio at 44100 Hz and processes the sampled data in the audio input callback.

1) Configuring AVAudioSession

NSError *error = nil;

self.session = [AVAudioSession sharedInstance];

[self.session setCategory:AVAudioSessionCategoryPlayAndRecord error:&error];

handleError(error);

// Listen for audio route changes

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(audioSessionRouteChangeHandle:) name:AVAudioSessionRouteChangeNotification object:self.session];

[self.session setPreferredIOBufferDuration:0.005 error:&error];

handleError(error);

[self.session setPreferredSampleRate:kSmaple error:&error];

handleError(error);

[self.session setActive:YES error:&error];

handleError(error);

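The documentation describes setPreferredIOBufferDuration as a "change to the I/O buffer duration"; see the official documentation for details. My understanding is that it determines how much audio, measured in seconds, each input or output callback delivers. However, when I set it to 0.005 and then to 0.93, the inNumberFrames value in the callback did not change. It stayed at 512, which means each callback delivered 512/44100 = 0.0116 s of data. (Is something wrong with my settings?) Note that the value is only a preference: the duration actually in effect can be read back from the session's IOBufferDuration property.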
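The arithmetic behind that observation can be sketched in C. The helper names `frames_for_duration` and `duration_for_frames` are hypothetical and exist only for this illustration; they are not part of any Apple API.

```c
#include <math.h>

/* Number of frames that a buffer of `seconds` holds at `sample_rate` Hz.
 * Returned as a double because the product need not be an integer. */
static double frames_for_duration(double seconds, double sample_rate) {
    return seconds * sample_rate;
}

/* Duration in seconds covered by one callback that delivers `frames` frames. */
static double duration_for_frames(unsigned frames, double sample_rate) {
    return (double)frames / sample_rate;
}
```

At 44100 Hz, a requested duration of 0.005 s corresponds to 220.5 frames, while the observed 512 frames correspond to about 0.0116 s, so the request was evidently not honored as written.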

setPreferredSampleRate sets the preferred sample rate for the audio data.

2) Obtaining the AudioComponentInstance

//Obtain a RemoteIO unit instance

AudioComponentDescription acd;

acd.componentType = kAudioUnitType_Output;

acd.componentSubType = kAudioUnitSubType_RemoteIO;

acd.componentFlags = 0;

acd.componentFlagsMask = 0;

acd.componentManufacturer = kAudioUnitManufacturer_Apple;

AudioComponent inputComponent = AudioComponentFindNext(NULL, &acd);

AudioComponentInstanceNew(inputComponent, &_toneUnit);

3) Initializing the AudioComponentInstance

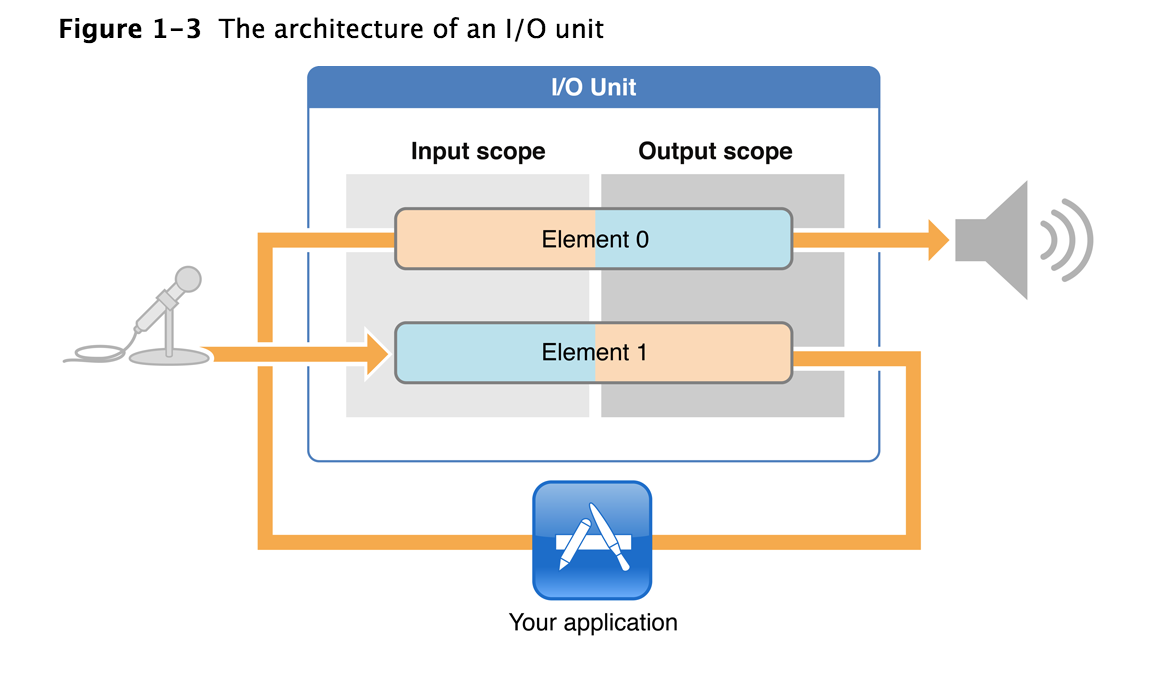

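The part inside the blue box in this figure is an I/O unit (an AudioComponentInstance). Element 0 in the figure connects to the speaker and is also called the output bus; Element 1 connects to the mic and is also called the input bus. Initializing the unit means setting the audio stream formats on these buses, registering the input and output callbacks, and so on.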

UInt32 enable = 1;

AudioUnitSetProperty(_toneUnit,

kAudioOutputUnitProperty_EnableIO,

kAudioUnitScope_Input,

kInputBus,

&enable,

sizeof(enable));

AudioUnitSetProperty(_toneUnit,

kAudioOutputUnitProperty_EnableIO,

kAudioUnitScope_Output,

kOutoutBus, &enable, sizeof(enable));

mAudioFormat.mSampleRate = kSmaple; // sample rate

mAudioFormat.mFormatID = kAudioFormatLinearPCM; // linear PCM samples

mAudioFormat.mFormatFlags = kAudioFormatFlagIsSignedInteger | kAudioFormatFlagIsPacked;

mAudioFormat.mFramesPerPacket = 1; // frames per packet

mAudioFormat.mChannelsPerFrame = 1; // 1 = mono, 2 = stereo

mAudioFormat.mBitsPerChannel = 16; // bits per sample

mAudioFormat.mBytesPerFrame = mAudioFormat.mBitsPerChannel*mAudioFormat.mChannelsPerFrame/8; // bytes per frame

mAudioFormat.mBytesPerPacket = mAudioFormat.mBytesPerFrame*mAudioFormat.mFramesPerPacket; // bytes per packet = bytes per frame * frames per packet

mAudioFormat.mReserved = 0;

CheckError(AudioUnitSetProperty(_toneUnit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input, kOutoutBus,

&mAudioFormat, sizeof(mAudioFormat)),

"couldn't set the remote I/O unit's output client format");

CheckError(AudioUnitSetProperty(_toneUnit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Output, kInputBus,

&mAudioFormat, sizeof(mAudioFormat)),

"couldn't set the remote I/O unit's input client format");

CheckError(AudioUnitSetProperty(_toneUnit,

kAudioOutputUnitProperty_SetInputCallback,

kAudioUnitScope_Output,

kInputBus,

&_inputProc, sizeof(_inputProc)),

"couldnt set remote i/o render callback for input");

CheckError(AudioUnitSetProperty(_toneUnit,

kAudioUnitProperty_SetRenderCallback,

kAudioUnitScope_Input,

kOutoutBus,

&_outputProc, sizeof(_outputProc)),

"couldnt set remote i/o render callback for output");

CheckError(AudioUnitInitialize(_toneUnit),

"couldn't initialize the remote I/O unit");

Note

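Pay attention to how kAudioUnitScope_Output/kAudioUnitScope_Input are combined with kOutoutBus/kInputBus. When enabling input, the input scope of kInputBus is connected directly to the mic, so that pair is used; enabling output is analogous. When setting the stream format, however, use the input scope of kOutoutBus and the output scope of kInputBus. A wrong combination returns error code -10865 (kAudioUnitErr_PropertyNotWritable), meaning you tried to set a read-only property. The official documentation explains that the hardware connection at the input scope of element 1 is opaque to you, and that "Your first access to audio data entering from the input hardware is at the output scope of element 1"; likewise, the output scope of element 0 is opaque. (Open question: when setting the input and output callbacks, choosing the Input, Output, or Global scope all seem to work, yet the documentation says "Your first access to audio data entering from the input hardware is at the output scope of element 1".)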

Audio processing

Background

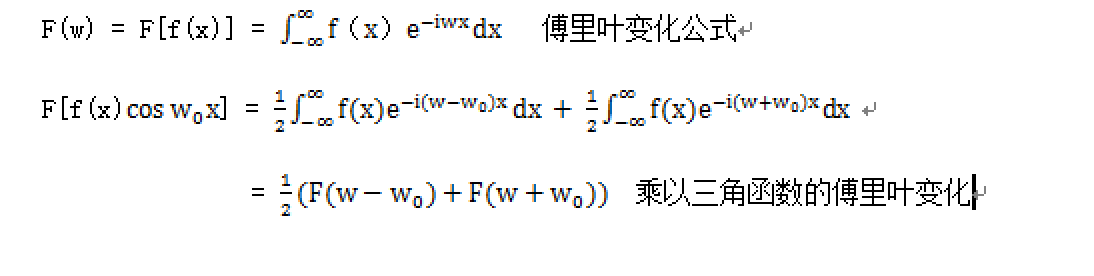

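Voice changing is essentially a shift of the sound signal's spectrum. Multiplying the signal by a sinusoid in the time domain is equivalent to shifting its spectrum in the frequency domain, but the spectrum is shifted by ±ω₀, that is, both upward and downward. The Fourier transform formula in the figure below illustrates this.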

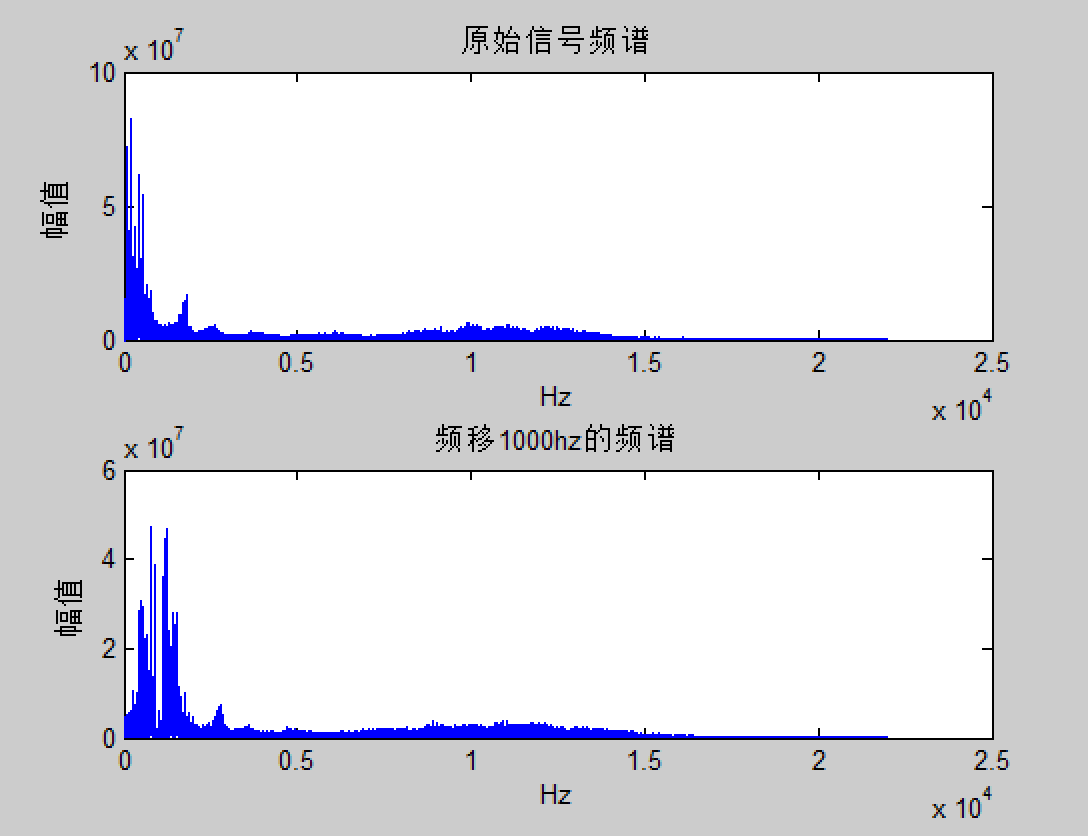
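Assuming the figure shows the standard modulation property of the Fourier transform (the image itself is not reproduced here), the relationship can be written out as follows, where F(ω) is the spectrum of f(t) and ω₀ is the frequency of the cosine:

```latex
\cos(\omega_0 t) = \tfrac{1}{2}\left(e^{j\omega_0 t} + e^{-j\omega_0 t}\right),
\qquad
f(t)\,e^{\pm j\omega_0 t} \;\longleftrightarrow\; F(\omega \mp \omega_0)

\Rightarrow\quad
f(t)\cos(\omega_0 t) \;\longleftrightarrow\;
\tfrac{1}{2}\left[\,F(\omega - \omega_0) + F(\omega + \omega_0)\,\right]
```

Multiplying by a cosine therefore produces two half-amplitude copies of the spectrum, one moved up by ω₀ and one moved down by ω₀, which is why one of the two copies has to be removed afterwards.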

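After the shift, the shifted F(ω-ω₀) component has to be filtered out. I loaded the raw PCM data into MATLAB to analyze its spectrum and then shifted it. (In practice my filtering step failed; if you know what kind of filter I should choose, please let me know.)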

1) Write a small program dedicated to collecting the raw audio data and saving it on the simulator (recording on the simulator makes it easy to copy the file out of the sandbox).

2) Read the audio data in MATLAB, then analyze its spectrum and frequency-shift it. Mind the endianness on the simulator and in MATLAB: I converted the data and inspected it, and both sides should be little-endian.

MATLAB code:

FID=fopen('record.txt','r');

fseek(FID,0,'eof');

len=ftell(FID);

frewind(FID);

A=fread(FID,len/2,'short');

A=A*1.0-mean(A);

Y=fft(A);

Fs=44100;

f=Fs*(0:length(A)/2 - 1)/length(A);

subplot(211);

plot(f,abs(Y(1:length(A)/2)));

k=0:length(A)-1;

cos_y=cos(2*pi*1000*k/44100);

cos_y=cos_y';

A2=A.*cos_y;

Y2=fft(A2);

subplot(212);

plot(f,abs(Y2(1:length(A)/2)));

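Does the spectrum of the original signal start at 0 Hz? After shifting by 1000 Hz, is it the frequencies below 1000 Hz that should be filtered out? My program actually shifts the spectrum by only 200 Hz, so should I choose an IIR high-pass filter with a cutoff above 200 Hz?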

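3) Design the filter in MATLAB and obtain its coefficients. I used MATLAB's fdatool to design a 5th-order IIR high-pass filter with a cutoff frequency of 200 Hz, exported the parameters, and converted them with [Bb,Ba]=sos2tf(SOS,G); to get the filter coefficients.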

4) With the Bb and Ba coefficients in hand, plug them directly into the input/output difference equation to compute the filter output y(n).
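Step 4 can be sketched in C as a Direct Form I filter that evaluates a[0]·y(n) = Σ b[k]·x(n-k) - Σ a[k]·y(n-k), with the second sum starting at k = 1. This is only an illustration under stated assumptions: the type `IIRFilter`, the functions `iir_init` and `iir_step`, and the limit `IIR_MAX_TAPS` are hypothetical names, and the coefficient arrays stand in for the Bb and Ba vectors exported from MATLAB. It is not the filtering code the author removed.

```c
#include <stddef.h>

#define IIR_MAX_TAPS 8 /* hypothetical limit; a 5th-order filter needs 6 taps */

typedef struct {
    double b[IIR_MAX_TAPS];      /* numerator coefficients (Bb) */
    double a[IIR_MAX_TAPS];      /* denominator coefficients (Ba) */
    double x_hist[IIR_MAX_TAPS]; /* x_hist[k-1] holds x(n-k) */
    double y_hist[IIR_MAX_TAPS]; /* y_hist[k-1] holds y(n-k) */
    size_t taps;                 /* order + 1; must be in 1..IIR_MAX_TAPS */
} IIRFilter;

/* Copy the coefficients and clear the history. */
static void iir_init(IIRFilter *f, const double *b, const double *a, size_t taps) {
    f->taps = taps;
    for (size_t k = 0; k < IIR_MAX_TAPS; k++) {
        f->b[k] = (k < taps) ? b[k] : 0.0;
        f->a[k] = (k < taps) ? a[k] : 0.0;
        f->x_hist[k] = 0.0;
        f->y_hist[k] = 0.0;
    }
}

/* Feed one input sample and return one output sample. */
static double iir_step(IIRFilter *f, double x) {
    double acc = f->b[0] * x;
    for (size_t k = 1; k < f->taps; k++) {
        acc += f->b[k] * f->x_hist[k - 1] - f->a[k] * f->y_hist[k - 1];
    }
    double y = acc / f->a[0];

    /* Age the history by one sample, then store the newest values. */
    for (size_t k = f->taps - 1; k > 0; k--) {
        f->x_hist[k] = f->x_hist[k - 1];
        f->y_hist[k] = f->y_hist[k - 1];
    }
    f->x_hist[0] = x;
    f->y_hist[0] = y;
    return y;
}
```

The filter state lives in the struct so that it persists from one audio callback to the next; resetting the history at every buffer would introduce a discontinuity at each buffer boundary. The arithmetic is done in double precision and only the final result would be converted back to 16-bit samples.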

Handling the audio input and output callbacks

1) Input callback

OSStatus inputRenderTone(

void *inRefCon,

AudioUnitRenderActionFlags *ioActionFlags,

const AudioTimeStamp *inTimeStamp,

UInt32 inBusNumber,

UInt32 inNumberFrames,

AudioBufferList *ioData)

{

ViewController *THIS=(__bridge ViewController*)inRefCon;

AudioBufferList bufferList;

bufferList.mNumberBuffers = 1;

bufferList.mBuffers[0].mData = NULL;

bufferList.mBuffers[0].mDataByteSize = 0;

OSStatus status = AudioUnitRender(THIS->_toneUnit,

ioActionFlags,

inTimeStamp,

kInputBus,

inNumberFrames,

&bufferList);

SInt16 *rece = (SInt16 *)bufferList.mBuffers[0].mData;

for (int i = 0; i < inNumberFrames; i++) {

rece[i] = rece[i]*THIS->_convertCos[i]; // frequency shift

}

RawData *rawData = &THIS->_rawData;

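// If mDataByteSize/2 samples still fit before the end of the buffer, memcpy them directly; otherwise copy sample by sample with wrap-around

if((rawData->rear+bufferList.mBuffers[0].mDataByteSize/2) <= kRawDataLen){

memcpy((uint8_t *)&(rawData->receiveRawData[rawData->rear]), bufferList.mBuffers[0].mData, bufferList.mBuffers[0].mDataByteSize);

// Wrap rear back to 0 when the write ends exactly at the end of the buffer
rawData->rear = (rawData->rear+bufferList.mBuffers[0].mDataByteSize/2)%kRawDataLen;

}else{

uint8_t *pIOdata = (uint8_t *)bufferList.mBuffers[0].mData;

// Iterate over the bytes of this buffer only, two bytes (one little-endian sample) at a time
for (UInt32 i = 0; i < bufferList.mBuffers[0].mDataByteSize; i+=2) {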

SInt16 data = pIOdata[i] | pIOdata[i+1]<<8;

rawData->receiveRawData[rawData->rear] = data;

rawData->rear = (rawData->rear+1)%kRawDataLen;

}

}

return status;

}

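The frequency-shifted sequence was originally supposed to be filtered, but after filtering the output was nothing but noise, so I removed that code here; the removed code is still in the complete source provided. The data is stored in a circular queue.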
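The circular queue can be sketched in plain C as follows. The names `Ring`, `ring_write`, `ring_read` and `RING_CAPACITY` are hypothetical; `RING_CAPACITY` stands in for kRawDataLen and is kept tiny so the wrap-around is easy to follow. As in the callbacks above, `front == rear` means the queue is empty, and there is no overflow check.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define RING_CAPACITY 8 /* hypothetical; stands in for kRawDataLen */

typedef struct {
    int16_t data[RING_CAPACITY];
    size_t front; /* index of the next sample to read */
    size_t rear;  /* index of the next slot to write */
} Ring;

/* Append `count` samples (count must be smaller than RING_CAPACITY).
 * The copy is split in two: the part that fits before the end of the
 * array, and the remainder that wraps around to index 0. */
static void ring_write(Ring *r, const int16_t *src, size_t count) {
    size_t first = RING_CAPACITY - r->rear;
    if (first > count) {
        first = count;
    }
    memcpy(&r->data[r->rear], src, first * sizeof(int16_t));
    memcpy(&r->data[0], src + first, (count - first) * sizeof(int16_t));
    r->rear = (r->rear + count) % RING_CAPACITY;
}

/* Pop one sample into *out. Returns 1 on success, 0 if the queue is empty. */
static int ring_read(Ring *r, int16_t *out) {
    if (r->front == r->rear) {
        return 0;
    }
    *out = r->data[r->front];
    r->front = (r->front + 1) % RING_CAPACITY;
    return 1;
}
```

Splitting the write into two `memcpy` calls avoids the sample-by-sample loop when the data crosses the end of the array. In a real audio path the two indices are touched from different callbacks, so their thread-safety would also need attention; that is outside the scope of this sketch.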

2) Output callback

OSStatus outputRenderTone(

void *inRefCon,

AudioUnitRenderActionFlags *ioActionFlags,

const AudioTimeStamp *inTimeStamp,

UInt32 inBusNumber,

UInt32 inNumberFrames,

AudioBufferList *ioData)

{

ViewController *THIS=(__bridge ViewController*)inRefCon;

SInt16 *outSamplesChannelLeft = (SInt16 *)ioData->mBuffers[0].mData;

RawData *rawData = &THIS->_rawData;

for (UInt32 frameNumber = 0; frameNumber < inNumberFrames; ++frameNumber) {

if (rawData->front != rawData->rear) {

outSamplesChannelLeft[frameNumber] = (rawData->receiveRawData[rawData->front]);

rawData->front = (rawData->front+1)%kRawDataLen;

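} else {

outSamplesChannelLeft[frameNumber] = 0; // queue is empty: output silence instead of stale buffer contents

}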

}

return 0;

}

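The code above captures audio in real time, applies the voice change, and plays the result back in real time. Without filtering, the output sounds a bit odd. I have forgotten most of the digital signal processing I learned at university, so I would appreciate pointers on the signal-processing part from anyone who knows it; the effect I am after is male/female voice conversion.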